Pandas Profiling (not an official part of pandas; it is a separate PyPI package) provides summary statistics that go beyond the basic df.describe(). It has 7000+ stars and 1000+ forks on GitHub. It automatically performs type inference and computes histograms, missing values, and correlations.
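To make the list of statistics concrete, here is a minimal sketch of the same checks done by hand in plain pandas and NumPy. The toy DataFrame and the `build_report` helper are hypothetical names invented for illustration. The helper assumes the `pandas_profiling` package is installed and uses its `ProfileReport` class; it is defined but not called here.

```python
import numpy as np
import pandas as pd

# Hypothetical toy data, invented for illustration only.
df = pd.DataFrame({
    "age": [23, 35, np.nan, 41, 29],
    "income": [40000.0, 62000.0, 58000.0, np.nan, 45000.0],
    "city": ["Austin", "Boston", "Austin", None, "Denver"],
})

basic = df.describe()                          # the baseline: numeric summary only
inferred_types = df.dtypes                     # per-column type inference
missing_counts = df.isna().sum()               # missing values per column
correlation = df[["age", "income"]].corr()     # pairwise Pearson correlation
hist_counts, hist_edges = np.histogram(df["age"].dropna(), bins=3)  # histogram of one column

print(missing_counts)
print(correlation)


def build_report(frame, path="report.html"):
    """Hypothetical helper: write an HTML profile report (assumes pandas_profiling is installed)."""
    from pandas_profiling import ProfileReport  # imported lazily so the sketch runs without it
    ProfileReport(frame, title="Profile").to_file(path)
```

The hand-written checks cover one column or one statistic at a time; the appeal of a profiling report is that it gathers all of them for every column in one pass.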

Wednesday, September 1, 2021

Wednesday, August 25, 2021

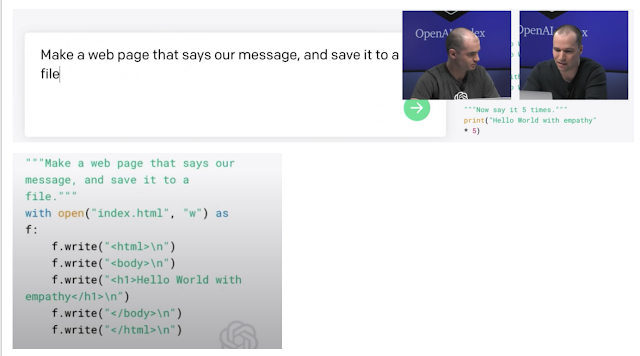

OpenAI Codex - Uniqtech Guide to code generation, natural language processing, GPT-3

Need to brush up on GPT-3? Check out our GPT-3 knowledge landing page, the Uniqtech Guide to understanding GPT-3. It is free; log in to access it. Scroll to the bottom to read all about Codex.

Uniqtech Guide to OpenAI Codex Basics

Link to our knowledge flash cards:

- OpenAI Codex Basics - Uniqtech Guide to GPT-3 [public, open access]

- Intermediate Codex - Uniqtech Guide to GPT-3 Codex code generation [public, intermediate]

- Advanced Codex notes - Uniqtech Guide to OpenAI GPT-3 Codex [pro, paid subscriber]

01 Copy and paste a first-grade math question from a worksheet.

02 Use the question as a prompt and get an answer from OpenAI Codex.

03 Codex translates the prompt from English to Python code.

04 Codex generates a numeric answer to the math question.

You can copy and paste the generated code into a notepad to customize it.
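As a rough idea of what the English-to-Python step can look like, here is a hand-written illustration. It is not actual Codex output, and the worksheet question and variable names are hypothetical, made up for this example.

```python
# Prompt (hypothetical worksheet question, not taken from a real worksheet):
# "Sam has 3 apples. He gets 4 more apples. How many apples does Sam have now?"

apples_at_start = 3   # "Sam has 3 apples"
apples_added = 4      # "He gets 4 more apples"

# The word problem reduces to a single addition.
total_apples = apples_at_start + apples_added
print(total_apples)
```

Because the result is ordinary Python, customizing it is a matter of changing the numbers or the operation and running it again.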

OpenAI Codex answers first grade math questions (Uniqtech Guide to Codex)

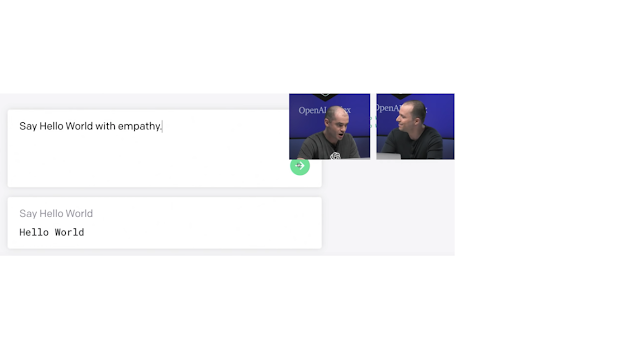

Codex says Hello World

Wednesday, July 28, 2021

Friday, June 18, 2021

Job post visualizations: Product Manager versus Program Manager versus Data Engineer

Our data visualization for job postings of Product Manager, Program Manager and Data Engineer.

Click the image to view a larger version, styled with our logo. Keep in mind that our initial analysis is limited, as we are just starting to collect big tech data. As our dataset grows, these insights may evolve. The difference between product and program management still looks subtle in the data, but in reality they are very different positions. We have friends doing both. The team hopes the visualization will become more informative soon.

Saturday, May 1, 2021

Imputation Strategies

Imputation is a preprocessing step applied to training data in machine learning. It fills in missing values so that models that require complete inputs can still be trained.
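A minimal sketch of two common strategies, mean and median imputation, using scikit-learn's `SimpleImputer`. The small array is hypothetical data chosen so that the two strategies give different results in the first column.

```python
import numpy as np
from sklearn.impute import SimpleImputer

# Hypothetical feature matrix with missing entries marked as NaN.
X = np.array([
    [1.0, 10.0],
    [np.nan, 20.0],
    [3.0, np.nan],
    [8.0, 30.0],
])

# Replace each NaN with the mean of its column.
X_mean = SimpleImputer(strategy="mean").fit_transform(X)

# Replace each NaN with the median of its column (less sensitive to outliers).
X_median = SimpleImputer(strategy="median").fit_transform(X)

print(X_mean)
print(X_median)
```

In the first column the observed values are 1, 3, and 8, so the mean strategy fills in 4 while the median strategy fills in 3. Which one is preferable depends on how skewed the column is.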

Installation - Machine Learning Deep Learning Prerequisites

import numpy as np # linear algebra

import seaborn as sns # statistical data visualization

from bs4 import BeautifulSoup as soup # web scraping

Install the packages listed in requirements.txt from the command line:

$ sudo pip install -r requirements.txt

Other commonly used libraries:

Other scikit-learn import statements you might see in the wild:

from sklearn.metrics import roc_auc_score

from sklearn.ensemble import RandomForestClassifier

from sklearn.naive_bayes import GaussianNB

from sklearn.neighbors import KNeighborsClassifier

from sklearn.tree import DecisionTreeClassifier

from sklearn.ensemble import AdaBoostClassifier

from sklearn.ensemble import GradientBoostingClassifier

from sklearn.linear_model import LogisticRegression
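The imports above are typically used together: fit a few classifiers and compare them with a metric such as ROC AUC. Here is a minimal sketch of that pattern with two of the listed classifiers. The synthetic dataset, split sizes, and hyperparameters are assumptions chosen for illustration, not recommendations.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import roc_auc_score
from sklearn.model_selection import train_test_split

# Synthetic binary classification data (hypothetical, for illustration only).
X, y = make_classification(
    n_samples=500, n_features=10, n_informative=5, random_state=0
)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0
)

models = {
    "logistic_regression": LogisticRegression(max_iter=1000),
    "random_forest": RandomForestClassifier(n_estimators=100, random_state=0),
}

scores = {}
for name, model in models.items():
    model.fit(X_train, y_train)
    # ROC AUC needs a score per sample, so use the positive-class probability.
    positive_proba = model.predict_proba(X_test)[:, 1]
    scores[name] = roc_auc_score(y_test, positive_proba)
    print(f"{name}: ROC AUC = {scores[name]:.3f}")
```

The other classifiers in the list (GaussianNB, KNeighborsClassifier, DecisionTreeClassifier, AdaBoostClassifier, GradientBoostingClassifier) expose the same `fit` and `predict_proba` methods, so they can be added to the `models` dictionary in the same way.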

Machine Learning in the Cloud

Workflow: how to generate or collect data, preprocess it, and train with it.

Sample tasks:

- Train machine learning models in Google Cloud.

- Collect data in Google Cloud or on Amazon Web Services (AWS).

- Analyze and preprocess training data.

- Clean and analyze data, and present your findings

- Preprocess data using Python

- Train a basic machine learning model

- Deploy a model for prediction using a REST API
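The last two tasks, training a basic model and serving predictions over a REST API, can be sketched locally before any cloud service is involved. The example below is a minimal sketch using only the Python standard library and scikit-learn. The `/predict` path, the `features` JSON field, and the helper names are assumptions made for this illustration; a cloud deployment would normally use the provider's own serving tools instead.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer

from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression

# Train a basic model on the iris dataset bundled with scikit-learn.
iris = load_iris()
model = LogisticRegression(max_iter=1000).fit(iris.data, iris.target)


def predict_from_json(body: bytes) -> dict:
    """Turn a JSON request body into a dict of predictions (hypothetical schema)."""
    payload = json.loads(body)
    features = payload["features"]  # assumed shape: list of rows, 4 numbers each
    labels = model.predict(features)
    return {"predictions": [int(label) for label in labels]}


class PredictHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        if self.path != "/predict":  # assumed endpoint name
            self.send_error(404, "Unknown endpoint")
            return
        length = int(self.headers.get("Content-Length", 0))
        try:
            result = predict_from_json(self.rfile.read(length))
        except (KeyError, ValueError):
            # Covers malformed JSON, a missing field, or rows of the wrong shape.
            self.send_error(400, "Bad request")
            return
        data = json.dumps(result).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(data)))
        self.end_headers()
        self.wfile.write(data)


def serve(port: int = 8000) -> None:
    """Start the server on localhost; blocks until interrupted."""
    HTTPServer(("127.0.0.1", port), PredictHandler).serve_forever()
```

Calling `serve()` starts the server, after which a POST to `/predict` with a body such as `{"features": [[5.1, 3.5, 1.4, 0.2]]}` returns the predicted class indices as JSON. Keeping the prediction logic in `predict_from_json` separates it from the HTTP layer, which makes it easy to test without a running server.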